Why Choose Time-of-Flight for Your Automotive 3D Sensing Applications

Time-of-flight (ToF) technologies are rapidly gaining momentum for interior and exterior sensing automotive applications. We look at how ToF compares to other 2D/3D sensing technologies, to help you make the right choice for your specific use case.

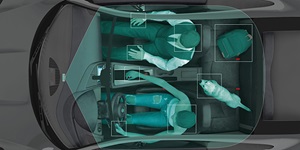

Interior Sensing Use Cases

In-car use cases of time-of-flight (ToF) technologies are growing rapidly, driven by today’s safety needs, comfort requirements, and the growth of autonomous driving. The latest systems target both driver and passenger sensing, their biomechanical and cognitive states, as well as in-cabin monitoring.

Examples of safety functions include NCAP-mandated driver fatigue detection, and occupancy/child seat detection for more precise airbag control. Additional safety functions such as hands-on-wheel detection, eye gaze, head pose, body pose and advanced seat-belt application are possible with the same ToF camera.

Comfort functions supported by ToF include hand position interaction (intuitive HMI) to operate the sunroof, air conditioning and radio; personalization through body, head and face monitoring; detection of objects left behind in the vehicle; and parcel classification and recognition. In-car security functions generally focus on anti-spoof face detection for trusted driver identification and on-route payment authorization.

Fig. 1. An overview of the main automotive interior and exterior sensing use cases.

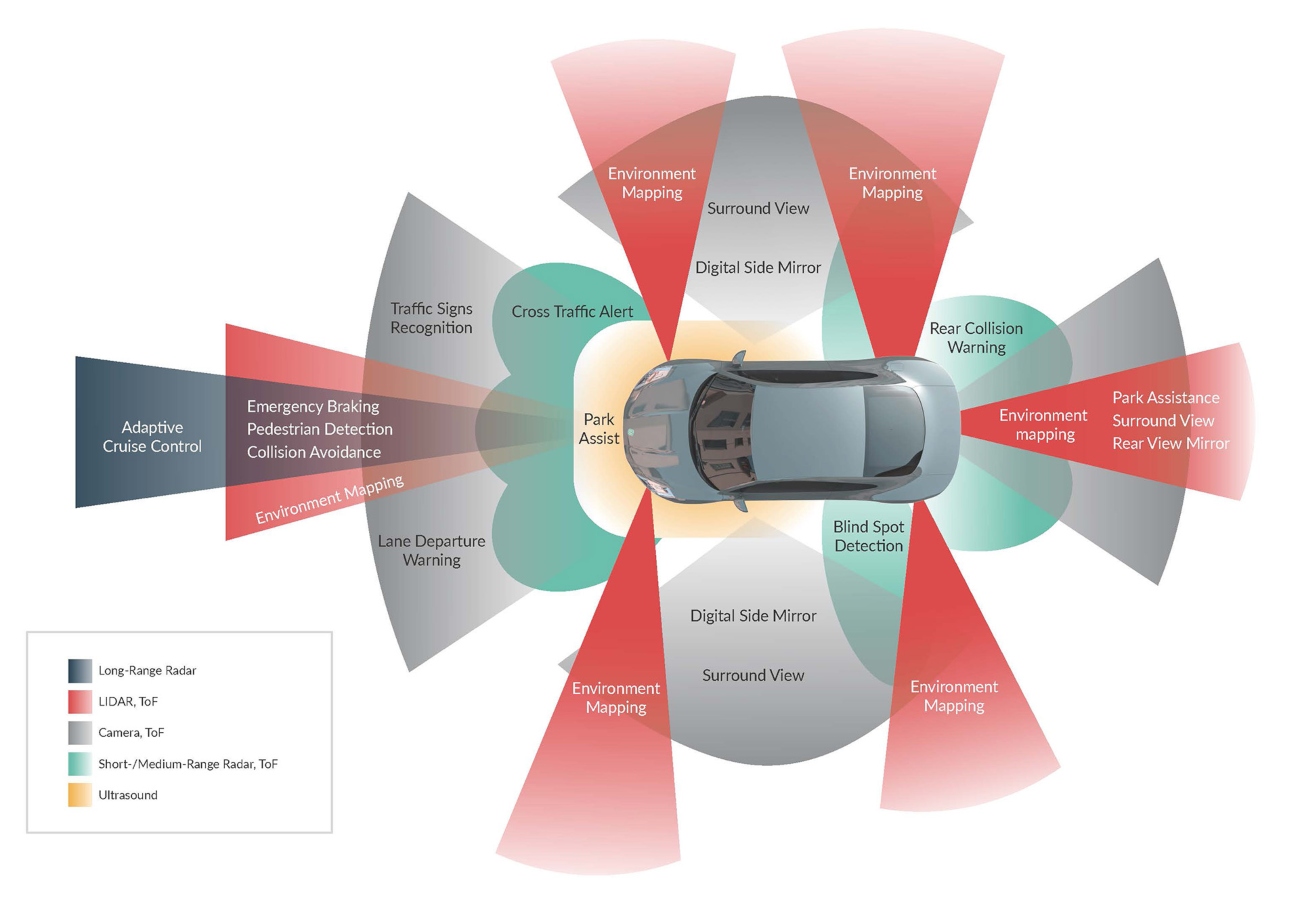

Exterior Sensing Use Cases

Looking into exterior sensing applications of ToF technologies, the continuing expansion of autonomous driving makes it increasingly apparent that traditional 2D cameras will be complemented by RADAR, LiDAR, stereo cameras, thermal cameras, short wavelength infrared cameras and/or ToF cameras. The right arsenal of sensors will be able to cover the “blind spots” that can occur in an automotive environment. Exterior use cases for ToF technology include short-range ADAS exterior cocooning and advanced parking assist.

Fig. 2. Complementary range sensing and 3D technologies used in a modern car.

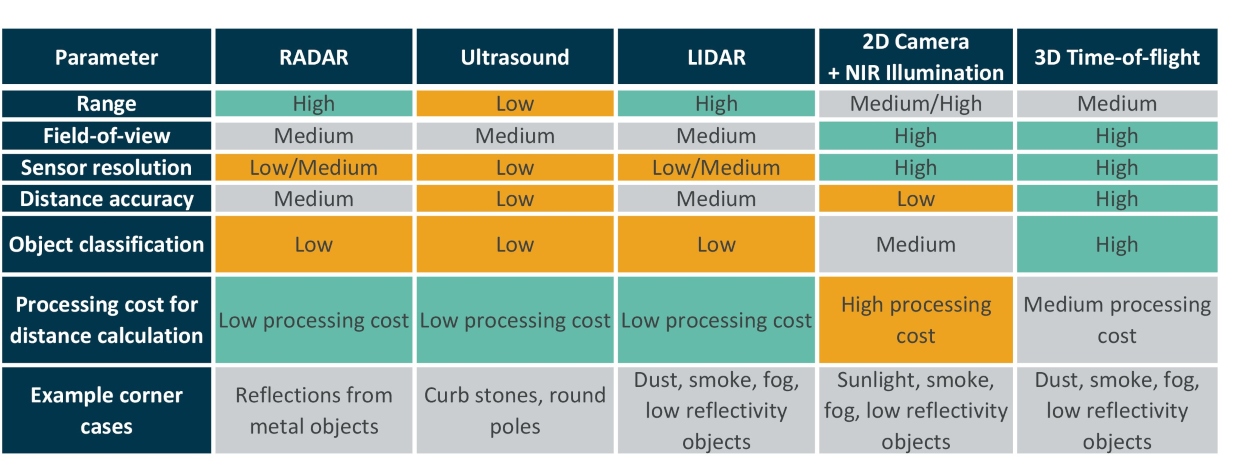

So how does ToF compare to and complement other available technologies for exterior sensing? What follows is a basic overview of the technology landscape. For each technology we outline its operating principle and its pros and cons, and suggest which use case it fits best. First, though, we need to understand the basics of time-of-flight.

A note on definitions

The terms LiDAR and time-of-flight are often used generically or incorrectly, depending on the context or industry. In its most generic sense, LiDAR covers all time-of-flight principles, whether direct or indirect. Within Melexis, and therefore in this article, LiDAR refers specifically to LiDAR scanning technology (as per the definition provided later). Similarly, while time-of-flight would normally refer to the general principle of measuring distance from the time light takes to travel, for Melexis it refers to continuous wave time-of-flight, also explained later.

Optical time-of-flight (ToF)

This sensing technology is able to detect people and objects, their absolute position, movement and shape in 3D. Optical ToF systems use infrared active illumination. This illumination source - an LED or VCSEL (Vertical-Cavity Surface-Emitting Laser) - illuminates a scene through beam-shaping optics. Once this light has reflected off the scene, a ToF camera records the reflected light and measures the elapsed time or phase shift relative to the reference. The distance is then calculated from the total flight time of the light, which travels to the scene and back at the speed of light, hence the term “time-of-flight”.
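As a minimal sketch of this principle, the snippet below converts a measured round-trip time into a distance. The function name is illustrative and does not come from any Melexis API. The only physical input is the speed of light, and the division by two accounts for the light travelling to the scene and back.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def distance_from_round_trip(t_seconds: float) -> float:
    """Return the target distance in metres for a total (out-and-back) flight time."""
    return C * t_seconds / 2.0


# A round trip of about 6.67 ns corresponds to a target roughly 1 m away.
print(f"{distance_from_round_trip(6.67e-9):.3f} m")
```

The tiny time scale involved (nanoseconds per metre) is why the measurement method matters so much in practice.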

There are broadly two ToF methods in use: direct and indirect.

Direct ToF (dToF)

Direct ToF or dToF is so-named because the sensor measures time directly, by means of a very accurate time base. LiDAR is an example of dToF. As short-range accuracy would suffer from small changes in the internal timing, most LiDARs are built for medium or long ranges (>100m). dToF electronics are complex and therefore do not scale well to high resolutions. Scanning techniques (mechanical, MEMS, …) are adopted to increase LiDAR resolution.
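To illustrate why small timing changes hurt short-range accuracy in particular, the sketch below turns a fixed timing error into a distance error and expresses it relative to the measured range. The 100 ps figure is an assumed value chosen purely for illustration, not a specification of any real LiDAR.

```python
C = 299_792_458.0  # speed of light, m/s


def range_error(timing_error_s: float) -> float:
    """Absolute distance error (m) caused by an error on the round-trip time."""
    return C * timing_error_s / 2.0


def relative_error(distance_m: float, timing_error_s: float) -> float:
    """Distance error as a fraction of the measured distance."""
    return range_error(timing_error_s) / distance_m


TIMING_ERROR_S = 100e-12  # assumed 100 ps timing error, illustrative only

for d in (0.5, 1.0, 10.0, 100.0):
    pct = 100.0 * relative_error(d, TIMING_ERROR_S)
    print(f"{d:6.1f} m: {pct:.3f} % error")
```

The absolute error is the same at every range (about 1.5 cm for the assumed 100 ps), so its relative weight shrinks as the distance grows.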

Indirect ToF (iToF)

The second approach is called indirect ToF or iToF because the sensor measures the phase shift between a periodic reference signal (signal modulation) and its received reflection from the measured object. iToF is less sensitive to drift of internal timing so is more suited to shorter distances. Two methods for indirect ToF can be distinguished: gated ToF (gToF) and continuous wave ToF (cwToF).
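The relation between phase shift and distance can be sketched as follows. This is the standard textbook relation for a periodically modulated signal, not a description of a specific sensor. The 20 MHz modulation frequency is an assumed example value, and the function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def distance_from_phase(phase_rad: float, f_mod_hz: float) -> float:
    """Distance (m) for a measured phase shift of a signal modulated at f_mod_hz."""
    return C * phase_rad / (4.0 * math.pi * f_mod_hz)


def unambiguous_range(f_mod_hz: float) -> float:
    """Largest distance (m) before the phase wraps around past 2*pi."""
    return C / (2.0 * f_mod_hz)


F_MOD_HZ = 20e6  # assumed modulation frequency, illustrative only

print(f"unambiguous range: {unambiguous_range(F_MOD_HZ):.2f} m")
print(f"phase of pi/2    : {distance_from_phase(math.pi / 2, F_MOD_HZ):.2f} m")
```

Because the phase repeats every full cycle, a single modulation frequency can only resolve distances within one unambiguous range, which is one reason this approach suits shorter distances.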

Melexis solutions are based on the continuous wave ToF (cwToF) methodology as this can be implemented with CMOS image sensor technology. In addition, cwToF sensors are more robust (linear) over a wide temperature range and are less impacted by light pulse variations over lifetime and temperature.

State-of-the-art automotive cwToF sensors generate real-time 2D and 3D video at VGA (640 x 480 pixel) resolution at a high frame rate (over 100 fps). Melexis cwToF technology is particularly robust in dynamic sunlight.

ToF Sensor Output Data

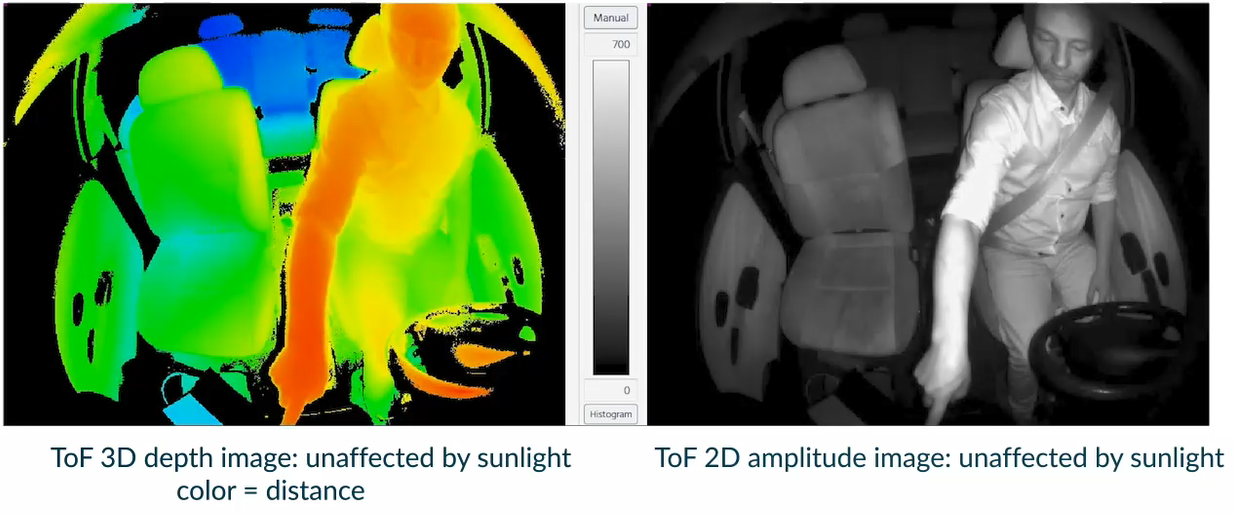

cwToF addresses many interior sensing use cases due to its unique combination of high resolution depth and amplitude (greyscale) mapping. This enables complex use cases for identifying people and objects without the necessity for a color or greyscale contrast between the foreground and background: classification is performed on 3D depth differences.

ToF cameras provide two simultaneous outputs. The first is a video stream of depth images. During evaluations, these video streams are typically displayed as a false color image where the color is representative of the depth, or as a point cloud. During production, the video streams are processed by detection algorithms and/or neural networks. The second stream provides amplitude images (also called confidence images) representing the intensity of the reflected signal.
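As a rough sketch of how a depth image can be turned into a point cloud for display, the snippet below back-projects each pixel with a simple pinhole camera model. The intrinsic parameters (`fx`, `fy`, `cx`, `cy`) and the convention that each pixel stores the distance along the optical axis are assumptions made for this example. A real camera would supply calibrated values and may report radial distance instead.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth: List[List[float]],
                    fx: float, fy: float,
                    cx: float, cy: float) -> List[Point]:
    """Back-project a depth image (metres along the optical axis) to 3D points."""
    points: List[Point] = []
    for v, row in enumerate(depth):          # v: pixel row index
        for u, z in enumerate(row):          # u: pixel column index
            if z <= 0.0:                     # skip pixels without a valid depth
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2 x 2 depth image with one invalid pixel (hypothetical values).
cloud = depth_to_points([[1.0, 0.0], [2.0, 1.0]], fx=1.0, fy=1.0, cx=0.0, cy=0.0)
print(cloud)
```

In an evaluation tool the resulting list of points would be handed to a 3D viewer. In production the same geometry typically feeds detection algorithms directly.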

Fig. 3. An example of an in-car recording with Melexis cwToF. Left: 3D depth video stream. Right: amplitude/confidence near infrared 2D video stream, unaffected by sunlight.

ToF and the Vision Landscape for Interior Sensing

The first 3D ToF-based system entered automotive volume production in 2015. It is mounted in the roof module and features QVGA resolution (320 x 240 pixels) and a narrow field-of-view. Its main function is hand gesture detection for infotainment control. In 2019 the volume of Melexis ToF sensors in cars passed the one million mark.

In parallel, the first Driver Monitoring Systems (DMS) for detecting driver fatigue have generally been based on conventional 2D cameras.

When high distance accuracy is vital, ToF offers a clear advantage. ToF sensors generally offer better depth accuracy than depth estimated by image processing of 2D camera video streams. For example, at a distance of one meter, interior sensing ToF systems typically offer a distance precision of 1% (so 1 cm) with one VCSEL. Several sources report that such precision cannot be reached with today’s automotive 2D cameras.

Moreover, ToF-based systems require fewer image processing resources than 2D-based systems for extracting depth information, so similar detection algorithms can run on smaller processors.

Sunlight robustness is another potential benefit of ToF systems. On top of sunlight rejection by means of an optical filter (a technique that is applied by both 2D and 3D ToF cameras), ToF cameras feature an additional sunlight rejection technique: ToF pixel read-out. This is a differential mode read-out, which rejects “DC” optical stimuli like unmodulated sunlight. This sunlight invariance improves detection robustness and simplifies the development of detection and classification algorithms. This in turn shortens algorithm development cycles and/or reduces training set sizes for AI deep learning.
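The effect of a differential read-out can be sketched with a commonly described four-phase demodulation scheme. This is an idealized textbook model, assumed here for illustration, and not necessarily how a Melexis pixel is implemented. It ignores shot noise and pixel saturation, which strong sunlight still causes in a real sensor. All names and values are hypothetical.

```python
import math
from typing import Tuple


def simulate_samples(phase: float, amplitude: float,
                     ambient: float) -> Tuple[float, ...]:
    """Idealized pixel samples at 0, 90, 180 and 270 degree reference offsets."""
    offsets = (0.0, 0.5 * math.pi, math.pi, 1.5 * math.pi)
    return tuple(ambient + amplitude * math.cos(phase - o) for o in offsets)


def demodulate(a0: float, a90: float, a180: float, a270: float) -> Tuple[float, float]:
    """Recover (phase, amplitude); the differences cancel any constant offset."""
    i = a0 - a180      # in-phase component, ambient term drops out
    q = a90 - a270     # quadrature component, ambient term drops out
    phase = math.atan2(q, i) % (2.0 * math.pi)
    amplitude = 0.5 * math.hypot(i, q)
    return phase, amplitude


dark = demodulate(*simulate_samples(phase=1.0, amplitude=50.0, ambient=0.0))
sunny = demodulate(*simulate_samples(phase=1.0, amplitude=50.0, ambient=5000.0))
print(dark, sunny)  # phase and amplitude agree despite the added ambient level
```

Because the unmodulated ambient contribution appears equally in every sample, it vanishes in both differences. The recovered phase and the amplitude image are therefore unchanged in this model.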

ToF and 3D+Vision Landscape for Exterior Sensing

Fig. 4: Comparison between different technologies.

2D Cameras

2D cameras are a widely adopted technology for automotive vision and sensing. They form the basis of Advanced Driver Assistance Systems (ADAS), Surround View Systems (SVS) and Driver Monitoring Systems (DMS). When coupled with infrared lighting they can also perform at night. 2D cameras rely on powerful processors, algorithms and neural networks to process those images. Most OEMs use cameras on autonomous cars, often in combination with RADAR, LiDAR or other technologies. The perfect sensor mix and amount of sensors to achieve “autonomous driving” safety is currently under heavy debate.

Stereo vision is based on the use of two cameras that need to be accurately positioned relative to each other. When correlating the two images it is possible to generate a depth map. This correlation is not always possible and depends on factors such as the lighting conditions and presence of structure in the observed scene.
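The depth calculation behind stereo vision can be sketched with the standard relation for a rectified camera pair, where depth equals focal length times baseline divided by disparity. The focal length, baseline and disparity values below are hypothetical. Returning `None` stands in for the case where no correlation between the two images can be found.

```python
from typing import Optional


def stereo_depth(disparity_px: float, focal_px: float,
                 baseline_m: float) -> Optional[float]:
    """Depth (m) from disparity for a rectified stereo pair, or None if unmatched."""
    if disparity_px <= 0.0:
        return None  # no valid match between the left and right image
    return focal_px * baseline_m / disparity_px


FOCAL_PX = 1000.0   # assumed focal length in pixels
BASELINE_M = 0.10   # assumed distance between the two cameras

for disparity in (100.0, 50.0, 10.0, 0.0):
    print(disparity, stereo_depth(disparity, FOCAL_PX, BASELINE_M))
```

Since depth is inversely proportional to disparity, distant objects produce very small disparities. This makes the result sensitive to both camera alignment and matching quality.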

RADAR

RADAR (radio detection and ranging) uses radio waves to detect objects at a distance and determine their speed and position. It has been used for decades to accurately calculate the position, speed and direction of all types of vehicles. RADAR is used in many modern cars for hazard detection and range-finding in features like Adaptive Cruise Control (ACC) and Automatic Emergency Braking (AEB). These features depend on information from multiple RADAR sensors, which is interpreted by in-vehicle computers to identify the distance, direction and relative speed of vehicles or hazards. Unlike optical solutions such as ToF and 2D cameras, RADAR is virtually impervious to adverse weather conditions, working reliably in dark, wet or foggy conditions. The limited resolution and “fuzziness” of current 24 GHz sensors is being addressed by the introduction of more accurate 77 GHz RADAR sensors.

RADAR and ToF should be considered complementary technologies. ToF uses infrared light from lasers as its illumination source, while RADAR uses radio waves. Each technology has its own corner cases. Generally, in a side-by-side comparison, RADAR has a longer range and performs better in adverse weather conditions. ToF has a shorter range but does not suffer from reflections (e.g. in city environments) and offers higher resolution and distance accuracy. These differences lead to different use cases, which makes the two technologies complementary in critical applications.

LiDAR

LiDAR (Light Detection and Ranging), also called 3D laser scanning, works similarly to RADAR but instead of radio waves it emits rapid laser signals, sometimes up to 150,000 pulses per second, which bounce back from an obstacle. A sensor measures the amount of time it takes for each pulse to bounce back (see paragraph “Direct ToF”). Advantages of LiDAR include accuracy and precision. It gives self-driving cars a 3D image of the surroundings over a long range. LiDAR is extremely accurate compared to cameras because the lasers aren’t fooled by shadows, bright sunlight or the oncoming headlights of other cars. Disadvantages include cost, large size, interference, jamming, and limitations in seeing through fog, snow and rain. LiDAR also does not provide information that cameras can typically see, such as the words on a sign, or the color of a stoplight.

As with RADAR, LiDAR complements indirect ToF rather than competing with it. Compared to ToF, high-resolution (scanning) LiDARs offer a longer range but come at a (much) higher price. Solid-state flash LiDARs also offer a longer range than ToF, but generally with lower resolution and lower depth accuracy.

Ultrasound

Ultrasonic sensors send out short ultrasonic impulses which are reflected by obstacles. The echo signals are then received and processed. Ultrasonic sensors perform well in bad weather such as fog, rain and snow as well as in low light, and are relatively inexpensive. Drawbacks include a slow reaction time, a limited field of view and lower accuracy than LiDAR. In addition, corner cases for ultrasonic sensors include detecting small objects or multiple objects moving quickly.
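The echo principle, and one reason ultrasonic sensors react more slowly than optical ones, can be sketched by comparing round-trip times for sound and light. The speed of sound used here (about 343 m/s in air at roughly 20 °C) is an approximate value, and the 3 m obstacle distance is an assumed example.

```python
SPEED_OF_SOUND = 343.0          # m/s in air at roughly 20 degC (approximate)
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def echo_distance(echo_time_s: float, wave_speed: float = SPEED_OF_SOUND) -> float:
    """Obstacle distance (m) from the time between emitted pulse and echo."""
    return wave_speed * echo_time_s / 2.0


def round_trip_time(distance_m: float, wave_speed: float) -> float:
    """Time (s) for a pulse to reach an obstacle and return."""
    return 2.0 * distance_m / wave_speed


OBSTACLE_M = 3.0  # assumed obstacle distance, illustrative only

print(f"ultrasound: {round_trip_time(OBSTACLE_M, SPEED_OF_SOUND) * 1e3:.1f} ms")
print(f"light     : {round_trip_time(OBSTACLE_M, SPEED_OF_LIGHT) * 1e9:.1f} ns")
```

Each ultrasonic measurement has to wait for its echo before the next pulse can be evaluated, so the achievable update rate is bounded by the speed of sound. An optical measurement over the same distance completes in nanoseconds.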

In exterior cocooning and automated parking applications, ToF has the potential to displace ultrasound sensors. This is mainly because of the high resolution and high detection speed of time-of-flight sensors, which solve many corner cases of ultrasonic sensors. On the other hand, ToF sensors are generally bulkier. This is somewhat compensated by the ability to cover a wide area with fewer ToF sensors.

FIR

A FIR (Far Infrared) camera or thermal camera collects heat radiation from objects, people and surroundings. By sensing infrared radiation at wavelengths far longer than visible light, FIR cameras access a different band of the electromagnetic spectrum than other sensing technologies do. They can thus generate a new layer of information, enabling vehicles to detect objects that may not otherwise be perceptible to cameras, RADAR or LiDAR. A FIR camera works well in most environmental conditions. However, it does not provide distance or depth information, only a thermal array (a 2D video stream). It detects living things easily, but is less good at detecting static objects at the same temperature as the background. FIR and ToF are complementary rather than competitive technologies.

Conclusion

It’s clear from this concise overview that autonomous cars need to combine multiple sensing technologies to do what a human driver can do, and that the various technologies need to be used in an overlapping and complementary manner. Optical time-of-flight is one such technology, and the latest ToF sensors now available will help define the envisaged autonomous driving experience, both for interior sensing use cases and for exterior cocooning applications.

Melexis is at the forefront of such developments and in particular fully confirms the appropriateness of continuous wave ToF for in-cabin applications. We designed and manufactured the first automotive qualified ToF sensor, which entered volume production in 2015. Just four years later we reached the impressive milestone of having more than one million ToF 3D camera ICs on the road, proving the maturity of this technology in automotive.