Sensor Technology Will Shape the Future of the Automotive Industry

By Vincent Hiligsmann, Melexis

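Sensors are now an indispensable part of modern vehicle design and serve a wide range of needs. They play a major role in helping automakers build models that are safer, more energy-efficient, and more comfortable. In the future, sensors will also help vehicles reach higher levels of automation, to the benefit of the whole industry.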

Intelligent Observability

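Alongside full controllability and data processing capability, intelligent observability is one of the prerequisites for autonomous driving. To achieve full observability, a vehicle must process data on many parameters, including speed, current, pressure, temperature, position, proximity detection, and gesture recognition. Proximity detection and gesture recognition have made great strides in recent years, and ultrasonic sensors and time-of-flight (ToF) technology are now being deployed in vehicles.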

Ultrasonic Sensors

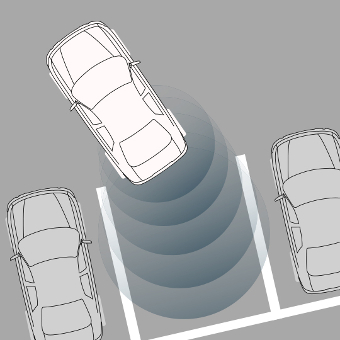

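As vehicles become more automated, we will not only rely on unprecedented innovations from new technologies but will also see more mature automotive technologies applied to new autonomous-driving scenarios. Park assist, for example, is the typical application of ultrasonic sensors today, yet it remains a driver-assistance system mounted in the bumper. Its limitations are that it works only at driving speeds of up to 10 km/h and that it cannot measure distance with 100% accuracy at close range. In an autonomous vehicle, however, ultrasonic sensors can be combined with radar, cameras, and other sensing technologies to provide more complete distance measurement.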

Gesture Recognition

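Ultrasonic sensing observes the world outside the vehicle, whereas ToF cameras focus on the interior. The transition to driverless cars will be gradual, so it is very important that the driver can switch from driverless mode back to manual mode in certain situations.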

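Today, with advanced driver assistance systems (ADAS), vehicles can drive themselves only partially, and the driver may have to intervene at any moment. The industry is expected to raise the level of automation further over the next few years, but even then the driver will still need to take manual control in certain environments, for example when driving in a city center. Changing this will take considerable time. Until fully driverless operation is achieved, the car needs to be able to alert the driver, so monitoring the driver's position and activity in real time is essential. Although ToF technology is still in its early stages, it is already being used in vehicles, for example to alert a driver who is not paying attention and to steer the vehicle to the side of the road. It can also enable a range of functions based on gesture recognition, such as turning up the radio volume or answering an incoming call with a swipe of the hand. The potential of ToF goes well beyond this, however, and it will play a more critical role as more advanced autonomous driving is explored. ToF cameras will be able to map the posture of the driver's entire upper body in three dimensions, making it possible to determine whether the driver's head is facing the road ahead and whether their hands are on the steering wheel.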

3D Imaging of Traffic Conditions

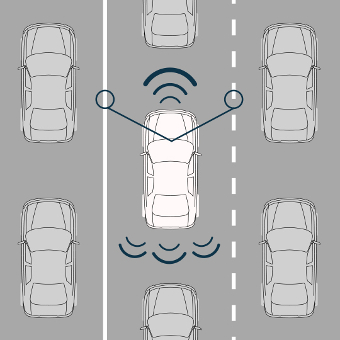

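Today's adaptive cruise control systems use radar to measure the distance to the vehicle ahead. This works well on highways, but in urban environments distances are shorter and pedestrians and/or vehicles can approach from many more directions, so more precise position measurement is required.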

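One solution is to add a camera, which allows distance to be determined more accurately. However, current image processing hardware cannot yet detect all the important features at the required speed and with the reliability needed for safe driving. This is exactly where lidar has the advantage. Lidar works on the same principle as radar: both measure the reflection of an emitted signal. Radar relies on radio waves, whereas lidar uses light (for example, a laser). The distance to an object or surface is calculated by measuring the time that elapses between emitting a pulse and receiving its reflection. Lidar's greatest advantage is that it can detect smaller objects than radar can. Unlike a camera, which observes the environment in a focal plane, lidar can produce an accurate and relatively detailed 3D rendering. This makes it easy for lidar to separate an object from what lies in front of or behind it, regardless of lighting conditions (day or night). As lidar prices continue to fall and related technologies develop further, the approach will be used more widely.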
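The pulse-timing principle described above can be illustrated with a short Python sketch. It is not taken from any Melexis product or lidar API. The function name `distance_from_round_trip` and its parameters are hypothetical, chosen only for illustration, and the sketch assumes an ideal measurement with the pulse travelling at the vacuum speed of light. Because the pulse travels to the object and back, the one-way distance is half the product of propagation speed and elapsed time.

```python
# Illustrative sketch only: names and parameters are assumptions, not a real sensor API.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum (exact SI value)


def distance_from_round_trip(
    elapsed_s: float,
    wave_speed_m_per_s: float = SPEED_OF_LIGHT_M_PER_S,
) -> float:
    """Return the one-way distance in metres for a measured round-trip time.

    elapsed_s is the time between emitting a pulse and receiving its
    reflection. The pulse covers the distance twice, hence the division by 2.
    """
    if elapsed_s < 0:
        raise ValueError("elapsed time must be non-negative")
    return wave_speed_m_per_s * elapsed_s / 2.0


if __name__ == "__main__":
    # A reflection received 200 nanoseconds after emission.
    print(f"{distance_from_round_trip(200e-9):.2f} m")
```

Passing a different `wave_speed_m_per_s` applies the same relation to other pulse-echo sensors. For an ultrasonic sensor, for instance, a caller would supply the speed of sound in air (roughly 343 m/s at about 20 °C) instead of the speed of light.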

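People have many visions of what the driverless experience will be, and the next generation of sensors now in development will be the ultimate deciding factor. Driven by the innovations described above, the cars of the future will have a clear and continuously updated picture of their surroundings, tracking both the external environment and the vehicle's occupants at all times. Sensing technology will therefore be a key factor in determining the future of the automotive industry.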